Stop guessing from CVs: measure skills objectively instead

The most reliable hiring decisions are built on structured, job-relevant skill measurement that is grounded in evidence, designed for fairness, and validated for predictive performance, not inferred from CVs.

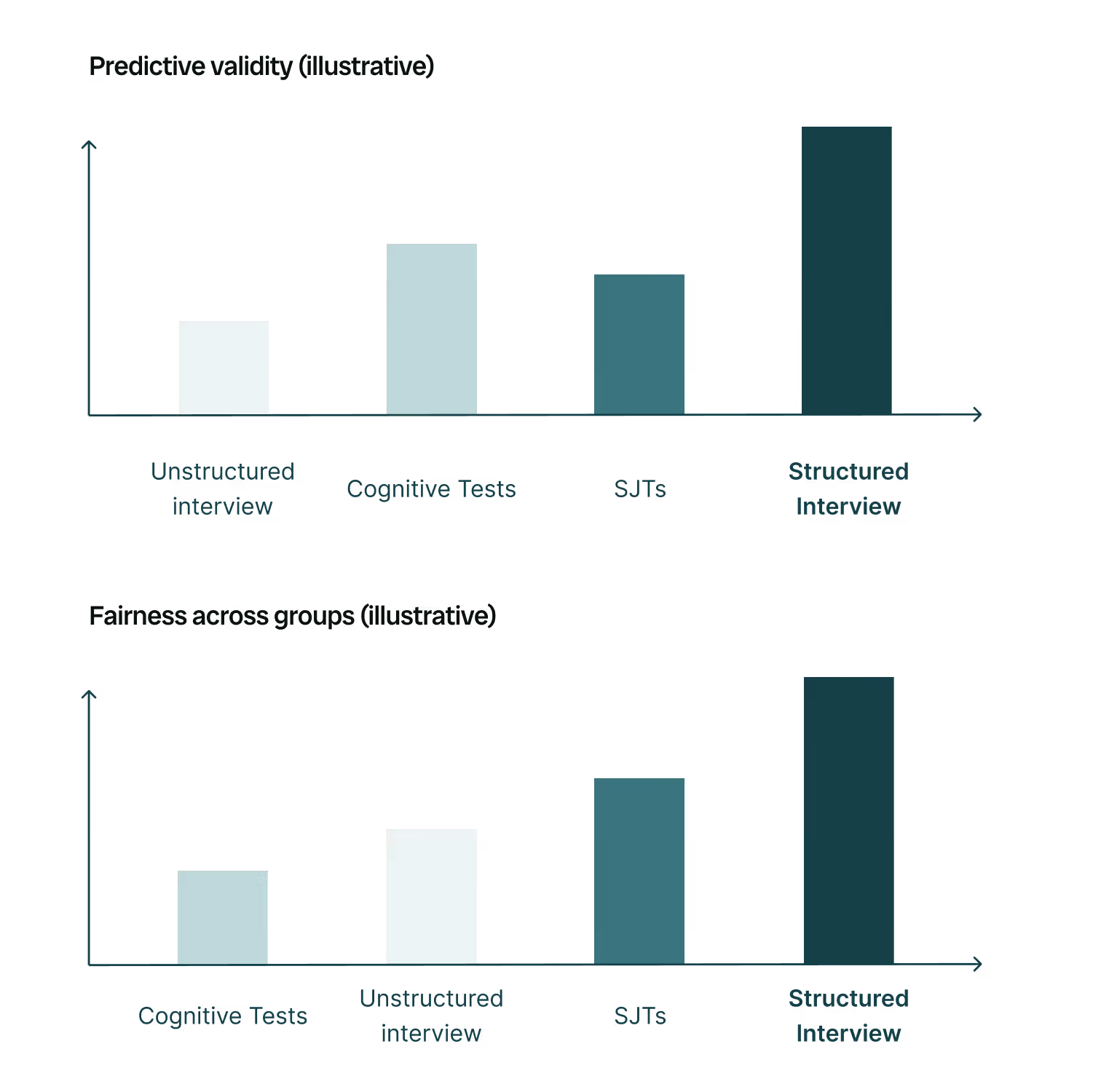

Predictive and fair selection, grounded in evidence

How structured, job-relevant methods predict performance and improve fairness.

Reliability and consistency by design

How we design scoring systems that are consistent, reproducible, and decision-ready.

Continuous fairness and adverse impact monitoring

How we test, track, and govern outcomes to reduce bias over time.
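To make adverse impact monitoring concrete, here is a minimal sketch of one common check: comparing each group's selection rate with the highest group's rate and flagging ratios below 0.8, the "four-fifths" rule of thumb from the US EEOC Uniform Guidelines. The function names, the example counts, and the choice of this particular check are illustrative assumptions, not a description of Maki's monitoring pipeline.

```python
# Illustrative sketch only: hypothetical helper names and made-up counts.

def selection_rates(outcomes):
    """Map each group to selected / applicants, skipping empty groups."""
    return {g: s / n for g, (s, n) in outcomes.items() if n > 0}


def adverse_impact_ratios(outcomes):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    top = max(rates.values())
    if top == 0:
        # Nobody was selected in any group, so there is no disparity to report.
        return {g: 1.0 for g in rates}
    return {g: r / top for g, r in rates.items()}


def flag_groups(outcomes, threshold=0.8):
    """Return groups whose impact ratio falls below the threshold."""
    ratios = adverse_impact_ratios(outcomes)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


# Made-up example: (candidates selected, candidates assessed) per group.
example = {"group_a": (48, 100), "group_b": (30, 100)}
flagged = flag_groups(example)
```

With these made-up counts, `group_b` has a ratio of 0.30 / 0.48 = 0.625 and would be flagged for review. A flag is a prompt to investigate, not proof of bias on its own.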

What CVs can and cannot tell you

CVs reflect where someone has been; they do not reliably show what someone can do in your role.

CVs are optimized for storytelling, not for evidence of job performance.

Experience and education are indirect proxies; they can correlate, but they are noisy and unequal signals.

CV review produces inconsistent decisions across reviewers and over time.

Why skills-based selection is more predictive and fair

Structured, job-relevant skill assessments outperform unstructured screening by measuring the capabilities that truly drive performance.

Research shows job-specific, behaviorally grounded methods are both more predictive and more equitable; structured interviews and situational judgement tests are strong examples (Sackett et al., 2023).

This is the core design principle behind Maki’s assessments: structured tasks, observable evidence, defensible scoring.

Why experience alone can mislead hiring decisions

Past roles can bring useful knowledge; they can also introduce rigidities that limit performance in a new environment.

Prior experience can add value when prior roles are similar because people bring transferable knowledge and skills.

The benefit fades as people gain organization-specific experience in the new context.

Experience can also carry rigidities; cognitive and behavioral habits from past roles can hinder performance, and those costs can persist.

Whether experience helps or hurts depends on individual attributes like adaptability and cultural fit.

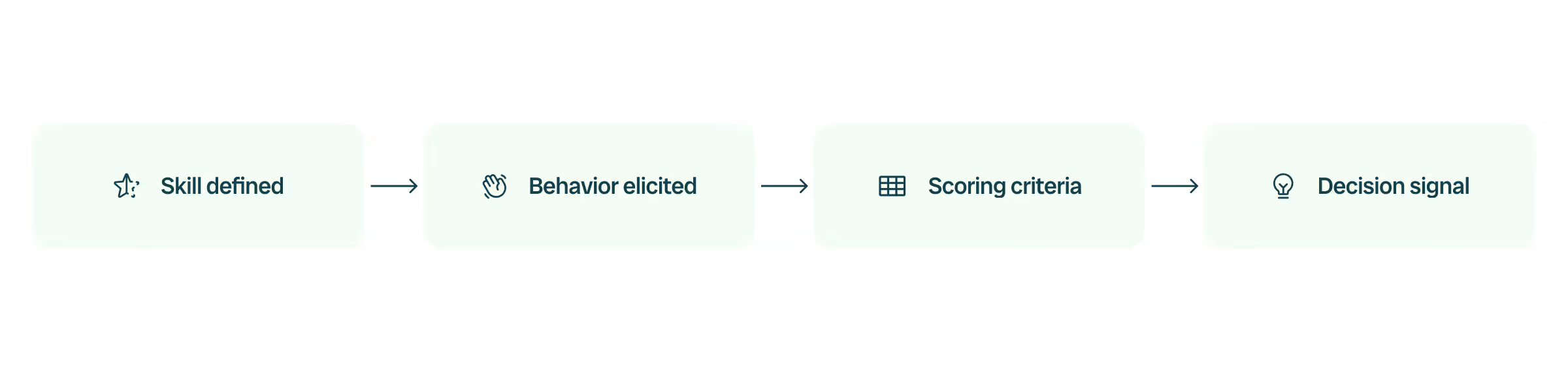

What “skill” means at Maki

Skills defined before they are measured

Every assessment starts with clearly defined job-relevant constructs, evaluated through structured tasks that generate observable evidence and decision-ready signals.

Designed tasks that reveal real capability

Candidates complete structured prompts, scenarios, and activities built to elicit job-relevant behavior, not self-presentation or keyword matching.

Decision-ready, explainable signals

Scores are tied to observable evidence and defined criteria, enabling clear, defensible hiring decisions rather than intuition or black-box rankings.

Why Maki assessments are reliable ways to measure skills

Defined constructs before measurement

Every assessment starts with clearly defined, job-relevant capabilities, not proxies like experience, education, or pedigree.

Healthy score distributions that support real decisions

Assessments are designed to meaningfully differentiate candidates, enabling defensible cut-scores and scalable hiring.

Observable, explainable evidence

Scores are tied to structured tasks and explicit criteria, not opaque inference or black-box modeling.

Fairness and validity built into the system

Skill models are calibrated, validated, and monitored to ensure they measure what they are intended to measure, equitably and defensibly.

Reliability as a release requirement

Results must be consistent and reproducible, with strong alignment between AI scoring and expert human judgment, before deployment.
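One way to picture an alignment check between AI scoring and expert human judgment is a chance-corrected agreement statistic such as Cohen's kappa, computed on the same set of candidate responses. The sketch below is an illustration under stated assumptions: the 0.7 release threshold, the function names, and the sample ratings are hypothetical, and the source does not say which statistic or threshold Maki uses.

```python
# Illustrative sketch only: hypothetical threshold, names, and ratings.
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two equal-length rating lists."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Share of items on which both raters gave the same rating.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, from each rater's marginal frequencies.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters used a single identical category throughout.
        return 1.0
    return (observed - expected) / (1 - expected)


def passes_release_gate(ai_scores, human_scores, min_kappa=0.7):
    """Hypothetical gate: require kappa at or above an assumed threshold."""
    return cohens_kappa(ai_scores, human_scores) >= min_kappa


# Made-up ratings on a 1-3 scale for eight responses.
ai = [3, 2, 3, 1, 2, 3, 1, 2]
human = [3, 2, 3, 1, 2, 2, 1, 2]
kappa = cohens_kappa(ai, human)
```

In this made-up sample the two raters agree on seven of eight responses, which gives a kappa of about 0.81 and would pass the assumed 0.7 gate. For ordinal scales, a weighted kappa or a correlation-based measure may be a better fit.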

How Maki delivers skills-based hiring in practice

From early screening to in-depth evaluation, Maki operationalizes structured skill measurement across the entire hiring funnel.

Shiro: early funnel skills screening

Mochi: structured conversational screening

Ken: deeper evaluation for high-stakes roles

Security

Technology & trust

From compliance certifications to bias audits and AI Act readiness, Maki is built on transparency, safety, and fairness.

Bias-audited AI

Explainable scoring

Ethical AI standards
